From Prediction to Reasoning: Evaluating o1’s Impact on LLM Probabilistic Biases

[ad_1]

Large language models (LLMs) have gained significant attention in recent years, but understanding their capabilities and limitations remains a challenge. Researchers are trying to develop methodologies to reason about the strengths and weaknesses of AI systems, particularly LLMs. The current approaches often lack a systematic framework for predicting and analyzing these systems’ behaviours. This has led to difficulties in anticipating how LLMs will perform various tasks, especially those that differ from their primary training objective. The challenge lies in bridging the gap between the AI system’s training process and its observed performance on diverse tasks, necessitating a more comprehensive analytical approach.

In this study, researchers from the Wu Tsai Institute, Yale University, OpenAI, Princeton University, Roundtable, and Princeton University have focused on analyzing OpenAI’s new system, o1, which was explicitly optimized for reasoning tasks, to determine if it exhibits the same “embers of autoregression” observed in previous LLMs. The researchers apply the teleological perspective, which considers the pressures shaping AI systems, to predict and evaluate o1’s performance. This approach examines whether o1’s departure from pure next-word prediction training mitigates limitations associated with that objective. The study compares o1’s performance to other LLMs on various tasks, assessing its sensitivity to output probability and task frequency. In addition to that, the researchers introduce a robust metric—token count during answer generation—to quantify task difficulty. This comprehensive analysis aims to reveal whether o1 represents a significant advancement or still retains behavioural patterns linked to next-word prediction training.

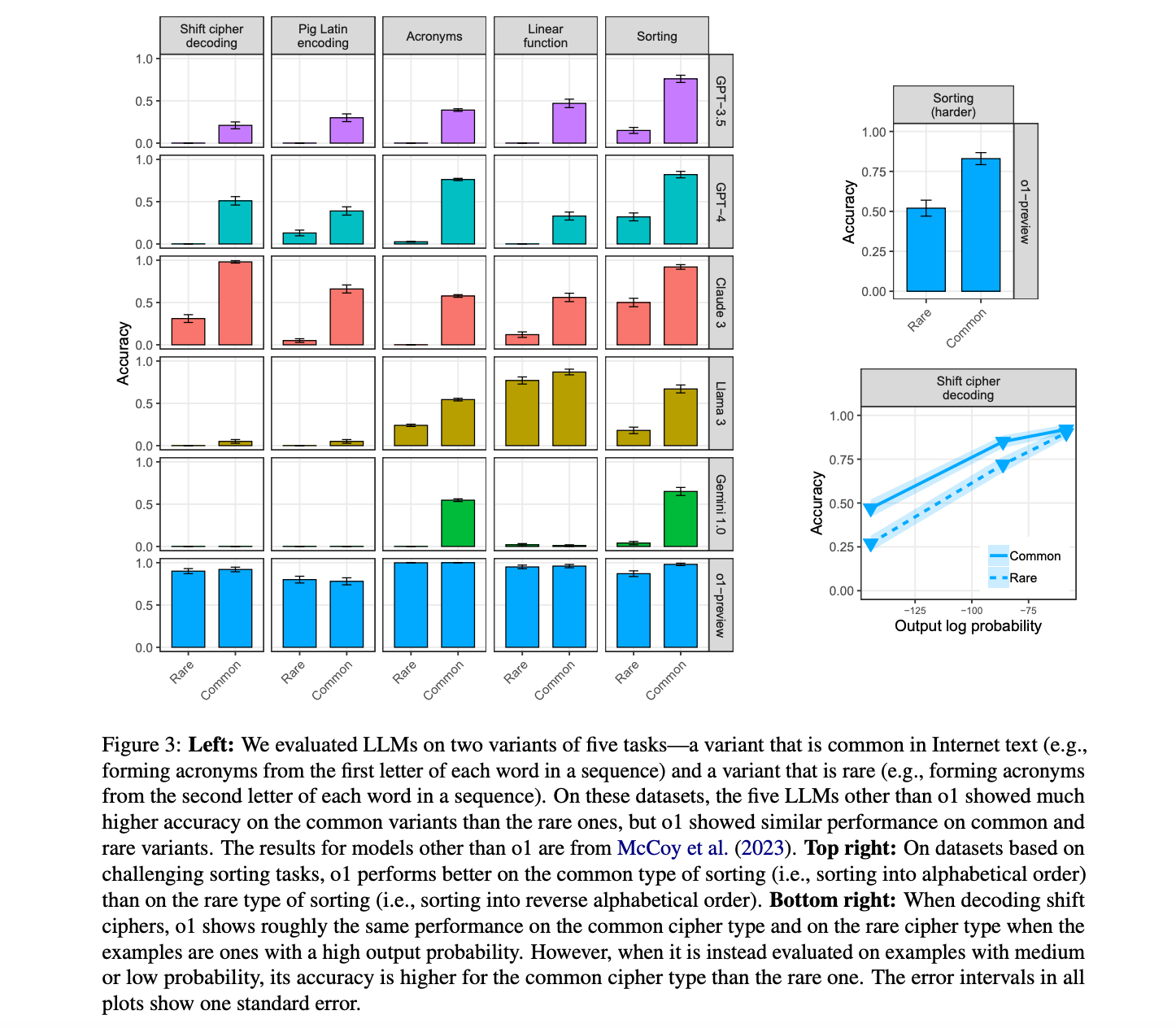

The study’s results reveal that o1, while showing significant improvements over previous LLMs, still exhibits sensitivity to output probability and task frequency. Across four tasks (shift ciphers, Pig Latin, article swapping, and reversal), o1 demonstrated higher accuracy on examples with high-probability outputs compared to low-probability ones. For instance, in the shift cipher task, o1’s accuracy ranged from 47% for low-probability cases to 92% for high-probability cases. In addition to that,, o1 consumed more tokens when processing low-probability examples, further indicating increased difficulty. Regarding task frequency, o1 initially showed similar performance on common and rare task variants, outperforming other LLMs on rare variants. However, when tested on more challenging versions of sorting and shift cipher tasks, o1 displayed better performance on common variants, suggesting that task frequency effects become apparent when the model is pushed to its limits.

The researchers conclude that o1, despite its significant improvements over previous LLMs, still exhibits sensitivity to output probability and task frequency. This aligns with the teleological perspective, which considers all optimization processes applied to an AI system. O1’s strong performance on algorithmic tasks reflects its explicit optimization for reasoning. However, the observed behavioural patterns suggest that o1 likely underwent substantial next-word prediction training as well. The researchers propose two potential sources for o1’s probability sensitivity: biases in text generation inherent to systems optimized for statistical prediction, and biases in the development of chains of thought favoring high-probability scenarios. To overcome these limitations, the researchers suggest incorporating model components that do not rely on probabilistic judgments, such as modules executing Python code. Ultimately, while o1 represents a significant advancement in AI capabilities, it still retains traces of its autoregressive training, demonstrating that the path to AGI continues to be influenced by the foundational techniques used in language model development.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. If you like our work, you will love our newsletter.. Don’t Forget to join our 50k+ ML SubReddit

Interested in promoting your company, product, service, or event to over 1 Million AI developers and researchers? Let’s collaborate!

Asjad is an intern consultant at Marktechpost. He is persuing B.Tech in mechanical engineering at the Indian Institute of Technology, Kharagpur. Asjad is a Machine learning and deep learning enthusiast who is always researching the applications of machine learning in healthcare.

[ad_2]

Source link